We're now living in a world where high-resolution displays are everywhere, from large TVs to smartphones, and the demand for high-quality images has grown with them. That's why Google Research introduced a new technology named RAISR (Rapid and Accurate Image Super-Resolution) last year, in an attempt to harness the power of machine learning to produce high-quality versions of low-resolution images. According to Google, the technology is also ready for mobile use:

"RAISR produces results that are comparable to or better than the currently available super-resolution methods, and does so roughly 10 to 100 times faster, allowing it to be run on a typical mobile device in real-time."

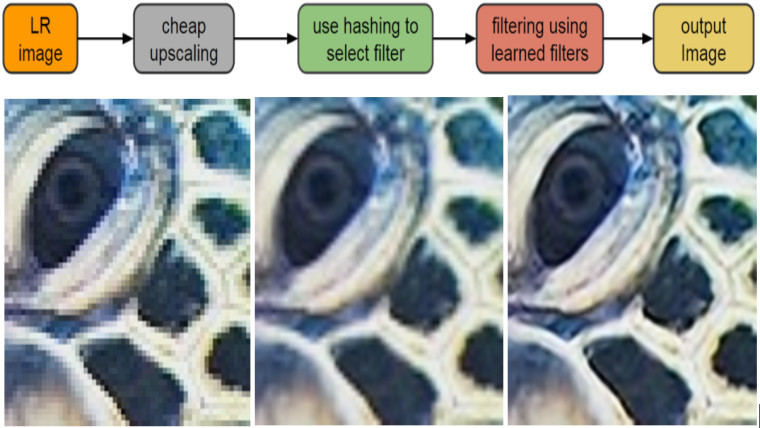

The company explained that RAISR is trained on pairs of images, one low quality and the other high quality. The training produces a set of filters, and RAISR then selects one for each pixel of the low-resolution image and applies it to recreate details of comparable quality to the original, as you can see in the image below.

Bottom: Low-res original (left), bicubic upsampler 2x (middle), RAISR output (right).

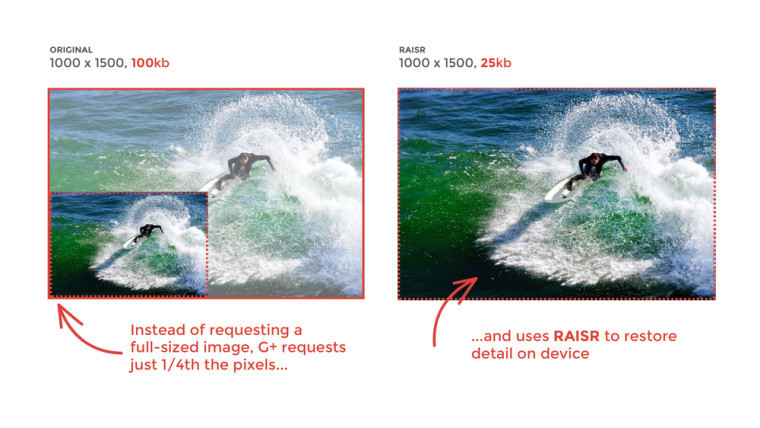
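
To make the idea of learned, per-pixel filters more concrete, here is a heavily simplified sketch in Python. It is not Google's implementation: the nearest-neighbour upscaler, the 3x3 filter size, the three-way edge classification, and every function name below are assumptions chosen to keep the example short. It only illustrates the general recipe of learning one small filter per "kind" of pixel from a low-res/high-res pair, then looking up and applying the matching filter at run time.

```python
RIDGE = 1e-3  # small regulariser so every linear system is solvable (assumption)

def downscale2x(img):
    """Average 2x2 blocks to build the low-res half of a training pair."""
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, len(img[0]) - 1, 2)]
            for y in range(0, len(img) - 1, 2)]

def upscale2x(img):
    """Cheap 2x upscale (nearest neighbour) that the filters then refine."""
    return [[v for v in row for _ in (0, 1)] for row in img for _ in (0, 1)]

def patch(img, y, x):
    """Flattened 3x3 neighbourhood around (y, x)."""
    return [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

def key(img, y, x):
    """Bucket a pixel by its position in the 2x2 grid and its local edge direction."""
    gx = img[y][x + 1] - img[y][x - 1]
    gy = img[y + 1][x] - img[y - 1][x]
    if abs(gx) + abs(gy) < 1e-6:
        edge = 0                      # flat area
    else:
        edge = 1 if abs(gx) >= abs(gy) else 2
    return (y % 2, x % 2, edge)

def solve(m, v):
    """Solve m * w = v with Gauss-Jordan elimination and partial pivoting."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(n):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[c])]
    return [aug[i][n] / aug[i][i] for i in range(n)]

def train(pairs):
    """Learn one least-squares 3x3 filter per bucket from (low, high) image pairs."""
    acc = {}
    for low, high in pairs:
        up = upscale2x(low)
        for y in range(1, len(up) - 1):
            for x in range(1, len(up[0]) - 1):
                p = patch(up, y, x)
                ata, atb = acc.setdefault(
                    key(up, y, x), ([[0.0] * 9 for _ in range(9)], [0.0] * 9))
                for i in range(9):
                    atb[i] += p[i] * high[y][x]
                    for j in range(9):
                        ata[i][j] += p[i] * p[j]
    filters = {}
    for k, (ata, atb) in acc.items():
        for i in range(9):
            ata[i][i] += RIDGE
        filters[k] = solve(ata, atb)
    return filters

def super_resolve(low, filters):
    """Upscale cheaply, then apply the filter selected for each interior pixel."""
    up = upscale2x(low)
    out = [row[:] for row in up]
    for y in range(1, len(up) - 1):
        for x in range(1, len(up[0]) - 1):
            w = filters.get(key(up, y, x))
            if w is not None:         # unseen bucket: keep the cheap upscale
                out[y][x] = sum(a * b for a, b in zip(w, patch(up, y, x)))
    return out

if __name__ == "__main__":
    high = [[float(x + 2 * y) for x in range(8)] for y in range(8)]  # toy image
    low = downscale2x(high)
    restored = super_resolve(low, train([(low, high)]))
    print(len(restored), "x", len(restored[0]))
```

A real system would train on many image pairs and use a richer description of each neighbourhood than this toy bucket key, but the run-time cost stays low for the same reason: each output pixel needs only a table lookup and one small filter.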

But Google seems to have found another use for the RAISR technology, according to a blog post this week. The company has stated that by using RAISR to display some of the large images on Google+, it was able to reduce bandwidth use by up to 75 percent per image. The saving comes from sending a lower-resolution version of the image, which is a much smaller file than the original, and letting RAISR reconstruct the detail on the device at comparable quality, as explained above.
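
A quick back-of-the-envelope calculation shows where a figure like 75 percent can come from. The sketch below assumes the image is sent at half its width and half its height and upscaled 2x on the device. The image dimensions and the function name are hypothetical, and pixel count is only a rough proxy for file size, since compressed size also depends on the image content.

```python
def pixel_savings(scale):
    """Fraction of pixels not transmitted when each dimension is divided by `scale`."""
    return 1.0 - 1.0 / (scale * scale)

# Hypothetical full-size image, sent at half width and half height.
full_w, full_h = 2048, 1536
sent_w, sent_h = full_w // 2, full_h // 2

full_pixels = full_w * full_h
sent_pixels = sent_w * sent_h

print(f"pixels sent: {sent_pixels} of {full_pixels}")
print(f"savings at 2x: {pixel_savings(2):.0%}")
```

Halving both dimensions leaves a quarter of the pixels, which matches the "up to 75 percent" upper bound quoted above.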

Finally, Google has stated that the rollout has only just begun, so for now only a subset of Android devices is using the technology. Nevertheless, RAISR is already being applied to more than 1 billion images each week, and has cut the total bandwidth used by the owners of those devices by about a third.

Source & Images: Google Blog via The Verge
