After numerous rumours of a return to the discrete GPU (dGPU) space (remember the i740 and Project Larrabee?), Intel confirmed its intentions by hiring one of the most renowned names in the business, former AMD chief GPU architect Raja Koduri, to lead its in-house dGPU effort.

At the IEEE International Solid-State Circuits Conference (ISSCC), held in San Francisco this past week, the company unveiled the early results of its work in the space: a prototype GPU built on a 14nm process.

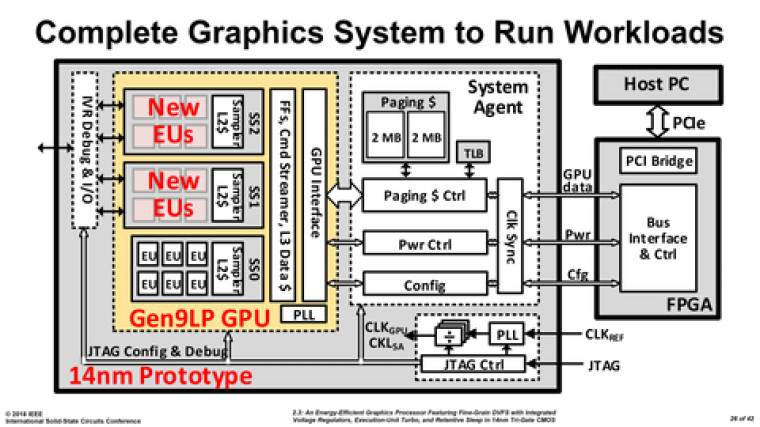

The prototype is said to pack 1.542 billion transistors and consists of two primary chips: the first contains the GPU itself along with a system agent, while the second houses a field-programmable gate array (FPGA).

The chip is said to be only a proof of concept at this stage, and no meaningful performance figures were provided. The chipmaker is, however, making efficiency a core focus of the technology's development, in an effort to replicate the success of its CPU lineup.

Given the chip's status as a simple prototype, it's unlikely we'll ever see this exact product come to market, but its reveal does indicate that Intel is getting serious about competing with Nvidia and AMD at the high end of the GPU market, no longer content with simply providing the integrated graphics found on most Core i processors.

Update: Intel has issued the following statement regarding its ISSCC presentation, clarifying that it did not concern a future product but was instead a research paper on new power management techniques:

Last week at ISSCC, Intel Labs presented a research paper exploring new circuit techniques optimized for power management. The team used an existing Intel integrated GPU architecture (Gen 9 GPU) as a proof of concept for these circuit techniques. This is a test vehicle only, not a future product. While we intend to compete in graphics products in the future, this research paper is unrelated. Our goal with this research is to explore possible, future circuit techniques that may improve the power and performance of Intel products.

Source and image: PC Watch via Lowyat.net
