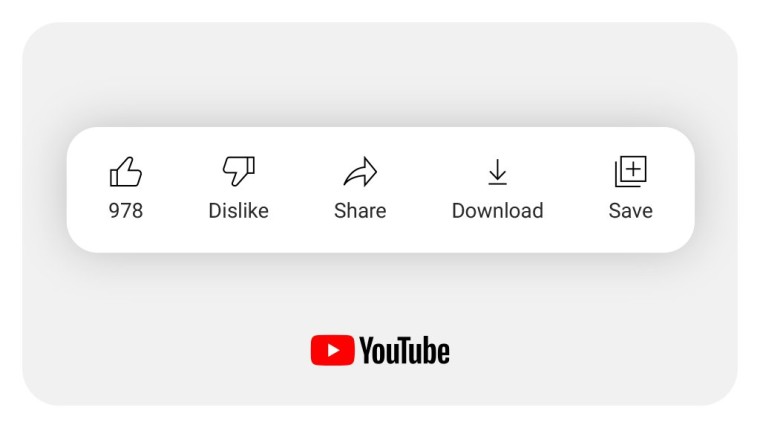

Yesterday, YouTube announced that it will be making dislike counts on videos private moving forward. The reasoning behind this decision is that the company wants to protect its creators from harassment and reduce "dislike attacks". The idea is to foster a positive, inclusive, and respectful environment where creators feel safe. While this sounds great on paper, I personally think this is a terrible idea that reduces the integrity and transparency of the platform even further.

YouTube has stated that it made this decision after conducting some experiments earlier this year. The company tested this with a subset of creators who couldn't opt out of it, and the results apparently showed that they were now less prone to dislike attacks. YouTube goes on to say that this malicious behavior impacts smaller channels as well, something that affected creators have complained about directly. The problem? YouTube has not made any of the results public. We don't have any charts to look at, we don't have any survey results, nada. I don't even know if this decision was based completely on the feedback of the creators or whether viewers were surveyed too - I know I wasn't. Instead, the company has tucked a small footnote into its announcement blog post that reads "Analysis conducted July 2021", without any link to the results. This only leads me to further question the sample size and the integrity of YouTube's experimentation. Is this something that massively impacts creators or is it just something that the company has found to be beneficial to its bottom line? No clue, but the lack of transparency is concerning.

Moving on to how this impacts viewers such as myself. Like many other platforms, YouTube doesn't discuss its discovery algorithm in too much detail. I was able to find some documentation which suggested that the following factors play a role in what video is being suggested to a viewer:

- what they watch

- what they don’t watch

- how much time they spend watching

- likes and dislikes

- ‘not interested’ feedback

That said, it doesn't really go into too much detail for obvious reasons. I don't have any metrics to judge how reliable this algorithm is, but in my personal experience, if I watch a couple of baseball videos, most of my recommendations fill up with baseball videos. If I watch a couple of live concert performances by Linkin Park, suddenly that's all that YouTube wants me to see.

But why is the discovery algorithm important? Let me describe a theoretical but close to real use-case to you. Suppose I search for some walkthrough related to The Legend of Zelda: Breath of the Wild (I did that a lot when I was playing the game, sue me). I get served some videos in search results. The top two videos are 15 minutes in duration, and both have roughly 100,000 views. Video A has 1,200 likes and 700 dislikes whereas Video B has 600 likes and 5 dislikes. Based on my past experience with YouTube, I immediately understand that I shouldn't waste my time on Video A because its ratio of likes to dislikes is so low. Video B is probably worth more of my time. This is what the situation is currently like.

However, if we view this in the context of YouTube's proposed design decision, the dislike count is private to the creator, and as a viewer, I am far more likely to consider watching Video A because of its higher like count. Even though Video B is better as discussed above, I waste my time on Video A just because YouTube has decided to hide indicators that would let me make informed decisions.
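To put numbers on the example above, here is a minimal Python sketch. The "approval share" (likes divided by likes plus dislikes) is just my own way of expressing the ratio I eyeball as a viewer; it is an assumption for illustration, not a metric YouTube publishes or is known to use, and the `Video` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Video:
    """Hypothetical container for the public counts in my example."""
    name: str
    likes: int
    dislikes: int

    def approval(self) -> float:
        """Share of ratings that are likes (0.0 if there are no ratings)."""
        total = self.likes + self.dislikes
        return self.likes / total if total else 0.0


video_a = Video("Video A", likes=1200, dislikes=700)
video_b = Video("Video B", likes=600, dislikes=5)

# With dislikes visible, I can compare the share of positive ratings.
best_by_approval = max([video_a, video_b], key=Video.approval)

# With dislikes hidden, the raw like count is all I have to go on.
best_by_likes = max([video_a, video_b], key=lambda v: v.likes)

for v in (video_a, video_b):
    print(f"{v.name}: {v.likes} likes, approval {v.approval():.1%}")
print("Pick with dislikes visible:", best_by_approval.name)
print("Pick with dislikes hidden: ", best_by_likes.name)
```

Video A comes out at roughly 63% approval against roughly 99% for Video B, yet the like count alone points me at Video A.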

One could argue that I should skim through the comments below both the videos to decide which video is worth watching, and while that might work, it would also require more effort and time from my side. It is likely something that will eventually become cumbersome for me, and defeats the purpose of discovery algorithms. You could also argue that maybe YouTube will recommend me Video B first in search results and place Video A lower, but I don't always make my viewing decisions based on where the algorithm has decided to place a video. I have various other indicators like the video title, the channel that uploaded it, and the number of views. That means that I might still be tempted to click on Video A, which is not a good choice.

Finally, let's talk more about YouTube's claim about the removal of dislike counts creating a more positive environment. Won't the same people creating a toxic culture just transition their tactics to the comments sections? Isn't it arguably worse to read negative comments about yourself in words rather than them being hidden behind a dislike count? Maybe this wasn't shown or catered to in YouTube's experimentation with its sample size, but I think this behavior is highly likely when YouTube is changing up the playing field for everyone altogether.

Microsoft has detailed findings on how online civility has been deteriorating over the past few years. Toxic people will find newer ways to portray negativity. I guess the point I'm trying to convey is that hiding the dislike count doesn't really solve the root problem, just changes its modality. Creators who care about dislike counts likely care about comments from viewers too.

At this point, YouTube is just asking us to blindly place trust in its algorithm and believe that its changes will impact online culture positively. My argument against this is that without hard facts and figures, I find it difficult to believe that making dislike counts private will bring about a massive positive change. Rather, I see a huge potential for viewers being misled into watching videos that are actually less beneficial to them and malicious actors still finding a way to bombard content with negativity via the comments section or other means.
